Sentiment analysis of a Twitter dataset with BERT and PyTorch

In this blog post, we are going to build a sentiment classifier for a Twitter dataset by fine-tuning BERT in Python with PyTorch, inside an Anaconda environment.

What is BERT

BERT is a large-scale transformer-based Language Model that can be finetuned for a variety of tasks.

For more information, see the original BERT paper and the HuggingFace documentation.

First, we are going to set up the Python environment with Anaconda.

Install Anaconda

The first step is to install Anaconda so that you can create different environments for different applications. Note that different applications may require different libraries. For example, some may require OpenCV 3 and others OpenCV 4. So, it is better to create a separate environment for each application.

Please click [here] to go to the official website of Anaconda. Then click “Download” as shown below.

Figure 1. Official website of Anaconda. [here]

Select the installer based on your OS. Assume that your OS is Windows 10 64-Bit.

Figure 2. Anaconda Installers selection page from the official website of Anaconda. Captured from [here] by author

Start to download the EXE of the installer and then follow the instructions to install Anaconda to your OS. Detailed instructions with screen captures are available at [here].

Install CUDA Toolkit (if you have GPU(s))

If you have GPU(s) on your computer and you want to use GPU(s) to speed up your applications, you have to install CUDA Toolkit. Please download CUDA Toolkit [here].

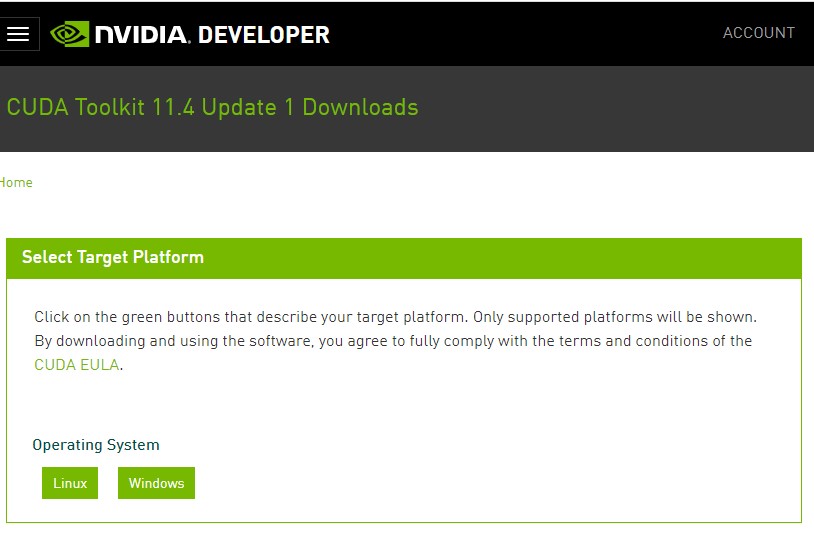

Select your Operating System, Architecture, Version, and Installer Type as shown below.

Figure 3. Select Installer for CUDA Toolkit 11.1. Captured from [here]

Click the “Download” button as shown in Figure 3 above and then install the CUDA Toolkit. The newest version of CUDA Toolkit is 11.1 at the time of writing this installation guide. Note that you have to check which GPU you are using and which version of CUDA Toolkit is applicable.

Create Conda environment for PyTorch

If you have finished Steps 1 and 2, you have successfully installed Anaconda and the CUDA Toolkit on your OS.

Please open your Command Prompt by searching ‘cmd’ as shown below.

By typing this line, you create a Conda environment called ‘bert’:

conda create --name bert python=3.7

Activate the environment so that the following packages are installed into it:

conda activate bert

conda install ipykernel

python -m ipykernel install --user --name bert --display-name "Python (Bert)"

conda install pytorch torchvision torchaudio cudatoolkit=11.0 -c pytorch

pip install torch

pip install pandas

pip install tqdm

pip install scipy

pip install scikit-learn

pip install joblib

pip install transformers

pip install ipywidgets

If you are interested in using images in your project, you can install the OpenCV library for image pre- and post-processing:

conda install -c conda-forge opencv

and install the Pillow library for reading and writing images:

conda install -c anaconda pillow

Later, we can create a project folder, activate our environment there, and start Jupyter:

conda activate bert

jupyter notebook&

Finally, we create a Jupyter notebook.
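Before moving on, it can help to confirm that the packages installed above are visible from the notebook kernel. The following is a minimal sketch that uses only the standard library; the helper name check_packages is hypothetical, and the package list simply mirrors the install commands above.

```python
import importlib.util


def check_packages(names):
    """Return a dict mapping each import name to True if it can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


# Import names of the packages installed with conda/pip above.
required = ["torch", "pandas", "tqdm", "scipy", "joblib",
            "transformers", "ipywidgets"]

for name, found in check_packages(required).items():
    print(f"{name:<14} {'ok' if found else 'MISSING'}")
```

Any package reported as MISSING was most likely installed into a different environment than the one the notebook kernel is using.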

Exploratory Data Analysis and Preprocessing

We will use the SMILE Twitter dataset.

Wang, Bo; Tsakalidis, Adam; Liakata, Maria; Zubiaga, Arkaitz; Procter, Rob; Jensen, Eric (2016): SMILE Twitter Emotion dataset. figshare. Dataset. https://doi.org/10.6084/m9.figshare.3187909.v2

We create a folder called Data for the dataset (you can also download it manually here):

mkdir Data

We can download the dataset into that folder from a bash shell with the wget command:

bash

wget -P Data https://github.com/ruslanmv/Deep-Learning-using-BERT/raw/main/Data/smile-annotations-final.csv

exit

Then, in the Jupyter notebook, we can type the following:

import torch

import pandas as pd

from tqdm.notebook import trange, tqdm

# tqdm is a fast, extensible progress bar for Python and the CLI

for i in trange(10):

    print(i)

0%| | 0/10 [00:00<?, ?it/s]

0

1

2

3

4

5

6

7

8

9

We check whether a GPU is available:

torch.cuda.is_available()

True

df = pd.read_csv('Data/smile-annotations-final.csv',

names =['id', 'text', 'category' ])

df.set_index('id', inplace=True)

df.text.iloc[0]

'@aandraous @britishmuseum @AndrewsAntonio Merci pour le partage! @openwinemap'

df.category.value_counts()

nocode 1572

happy 1137

not-relevant 214

angry 57

surprise 35

sad 32

happy|surprise 11

happy|sad 9

disgust|angry 7

disgust 6

sad|disgust 2

sad|angry 2

sad|disgust|angry 1

Name: category, dtype: int64

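# Drop tweets annotated with more than one emotion (labels joined by '|')

df = df[~df.category.str.contains(r'\|')]

# Drop tweets without an emotion label

df = df[df.category != 'nocode']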

df.category.value_counts()

happy 1137

not-relevant 214

angry 57

surprise 35

sad 32

disgust 6

Name: category, dtype: int64

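The dataset is heavily imbalanced: 'happy' accounts for 1137 of the 1481 remaining tweets, while 'disgust' has only 6.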
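To put numbers on the imbalance, the sketch below computes each class's share of the data and an inverse-frequency weight from the counts printed above. The weighting formula (total / (number of classes * class count)) is a common heuristic and an assumption here; the tutorial itself trains without class weights.

```python
# Class counts copied from the value_counts() output above.
counts = {"happy": 1137, "not-relevant": 214, "angry": 57,
          "surprise": 35, "sad": 32, "disgust": 6}

total = sum(counts.values())
num_classes = len(counts)


def class_share(count):
    """Fraction of all tweets that belong to a class."""
    return count / total


def inverse_frequency_weight(count):
    """Heuristic weight: rare classes get a larger weight."""
    return total / (num_classes * count)


for name, count in counts.items():
    print(f"{name:<13} share={class_share(count):6.1%}  "
          f"weight={inverse_frequency_weight(count):6.2f}")
```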

possible_labels = df.category.unique()

label_dict = {}

for index, possible_label in enumerate(possible_labels):

    label_dict[possible_label] = index

label_dict

{'happy': 0,

'not-relevant': 1,

'angry': 2,

'disgust': 3,

'sad': 4,

'surprise': 5}

df['label'] = df.category.replace(label_dict)

df.head()

| id | text | category | label |
|---|---|---|---|
| 614484565059596288 | Dorian Gray with Rainbow Scarf #LoveWins (from... | happy | 0 |
| 614746522043973632 | @SelectShowcase @Tate_StIves ... Replace with ... | happy | 0 |
| 614877582664835073 | @Sofabsports thank you for following me back. ... | happy | 0 |
| 611932373039644672 | @britishmuseum @TudorHistory What a beautiful ... | happy | 0 |
| 611570404268883969 | @NationalGallery @ThePoldarkian I have always ... | happy | 0 |

df['text'].iloc[0]

'Dorian Gray with Rainbow Scarf #LoveWins (from @britishmuseum http://t.co/Q4XSwL0esu) http://t.co/h0evbTBWRq'

Training/Validation Split

from sklearn.model_selection import train_test_split

df.index.values

array([614484565059596288, 614746522043973632, 614877582664835073, ...,

613678555935973376, 615246897670922240, 613016084371914753],

dtype=int64)

df.label.values

array([0, 0, 0, ..., 0, 0, 1], dtype=int64)

X_train, X_val, y_train, y_val = train_test_split(

df.index.values,

df.label.values,

test_size=0.15,

random_state=17,

stratify=df.label.values

)

X_train

array([614767094345936896, 610755488372948992, 610609791073931266, ...,

613744184495894529, 610873494910443520, 610741907426267136],

dtype=int64)

y_train

array([0, 0, 0, ..., 0, 0, 2], dtype=int64)

df['data_type']= ['no_set']*df.shape[0]

X_train

array([614767094345936896, 610755488372948992, 610609791073931266, ...,

613744184495894529, 610873494910443520, 610741907426267136],

dtype=int64)

df.loc[X_train, 'data_type']='train'

df.loc[X_val, 'data_type']='val'

df.groupby(['category','label','data_type']).count()

| category | label | data_type | text |
|---|---|---|---|
| angry | 2 | train | 48 |
| angry | 2 | val | 9 |
| disgust | 3 | train | 5 |
| disgust | 3 | val | 1 |
| happy | 0 | train | 966 |
| happy | 0 | val | 171 |
| not-relevant | 1 | train | 182 |
| not-relevant | 1 | val | 32 |
| sad | 4 | train | 27 |
| sad | 4 | val | 5 |
| surprise | 5 | train | 30 |
| surprise | 5 | val | 5 |
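The counts above show that every class keeps roughly the same 85/15 proportion in both subsets, which is what the stratify argument is for. A small self-contained sketch on made-up labels (the toy arrays are assumptions, not the SMILE data) illustrates the effect:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data: 80 samples of class 0 and 20 samples of class 1.
ids = np.arange(100)
labels = np.array([0] * 80 + [1] * 20)

ids_train, ids_val, y_train_toy, y_val_toy = train_test_split(
    ids, labels, test_size=0.25, random_state=17, stratify=labels
)

# With stratification, both subsets keep the 80/20 class ratio.
print(np.bincount(y_train_toy))  # [60 15]
print(np.bincount(y_val_toy))    # [20  5]
```

Without stratify, a rare class such as disgust (6 tweets) could easily end up entirely in one of the two subsets.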

Loading Tokenizer and Encoding our Data

from transformers import BertTokenizer

from torch.utils.data import TensorDataset

tokenizer = BertTokenizer.from_pretrained(

'bert-base-uncased',

do_lower_case=True

)

df.data_type=='train'

id

614484565059596288 True

614746522043973632 True

614877582664835073 True

611932373039644672 True

611570404268883969 True

...

611258135270060033 True

612214539468279808 True

613678555935973376 True

615246897670922240 True

613016084371914753 True

Name: data_type, Length: 1481, dtype: bool

df[df.data_type=='train']

| id | text | category | label | data_type |
|---|---|---|---|---|
| 614484565059596288 | Dorian Gray with Rainbow Scarf #LoveWins (from... | happy | 0 | train |
| 614746522043973632 | @SelectShowcase @Tate_StIves ... Replace with ... | happy | 0 | train |
| 614877582664835073 | @Sofabsports thank you for following me back. ... | happy | 0 | train |
| 611932373039644672 | @britishmuseum @TudorHistory What a beautiful ... | happy | 0 | train |
| 611570404268883969 | @NationalGallery @ThePoldarkian I have always ... | happy | 0 | train |
| ... | ... | ... | ... | ... |
| 611258135270060033 | @_TheWhitechapel @Campaignforwool @SlowTextile... | not-relevant | 1 | train |
| 612214539468279808 | “@britishmuseum: Thanks for ranking us #1 in @... | happy | 0 | train |
| 613678555935973376 | MT @AliHaggett: Looking forward to our public ... | happy | 0 | train |
| 615246897670922240 | @MrStuchbery @britishmuseum Mesmerising. | happy | 0 | train |
| 613016084371914753 | @NationalGallery The 2nd GENOCIDE against #Bia... | not-relevant | 1 | train |

1258 rows × 4 columns

df[df.data_type=='train'].text.values

We have to encode the texts by using tokenizer.batch_encode_plus

encoded_data_train = tokenizer.batch_encode_plus(

df[df.data_type=='train'].text.values,

add_special_tokens=True,

return_attention_mask=True,

#pad_to_max_length=True,

padding=True,

truncation=True,

max_length=256,

return_tensors='pt'

)

encoded_data_val = tokenizer.batch_encode_plus(

df[df.data_type=='val'].text.values,

add_special_tokens=True,

return_attention_mask=True,

# pad_to_max_length=True,

padding=True,

truncation=True,

max_length=256,

return_tensors='pt'

)
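With padding=True, the tokenizer pads every sequence in the batch to the length of the longest one and returns an attention mask that marks real tokens with 1 and padding with 0. The following pure-Python sketch mimics that behaviour on made-up token ids; the function pad_batch and the ids are hypothetical and only approximate what the tokenizer does internally (for instance, real truncation keeps the closing special token).

```python
def pad_batch(sequences, pad_id=0, max_length=256):
    """Truncate to max_length, then pad to the longest sequence in the batch."""
    truncated = [seq[:max_length] for seq in sequences]
    longest = max(len(seq) for seq in truncated)
    input_ids, attention_mask = [], []
    for seq in truncated:
        n_pad = longest - len(seq)
        input_ids.append(seq + [pad_id] * n_pad)
        attention_mask.append([1] * len(seq) + [0] * n_pad)
    return input_ids, attention_mask


# Made-up ids; 101 and 102 stand in for the [CLS] and [SEP] special tokens.
toy_batch = [[101, 7592, 102], [101, 7592, 2088, 999, 102]]
toy_ids, toy_mask = pad_batch(toy_batch)

print(toy_ids)   # [[101, 7592, 102, 0, 0], [101, 7592, 2088, 999, 102]]
print(toy_mask)  # [[1, 1, 1, 0, 0], [1, 1, 1, 1, 1]]
```

The attention mask is what lets BERT ignore the padded positions when it computes attention.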

For the training set:

input_ids_train = encoded_data_train['input_ids']

attention_masks_train = encoded_data_train['attention_mask']

labels_train = torch.tensor(df[df.data_type=='train'].label.values)

For the validation set:

input_ids_val = encoded_data_val['input_ids']

attention_masks_val = encoded_data_val['attention_mask']

labels_val = torch.tensor(df[df.data_type=='val'].label.values)

We then create one TensorDataset for the training set and one for the validation set, each holding the input IDs, attention masks and labels that BERT expects:

dataset_train = TensorDataset(

input_ids_train,

attention_masks_train,

labels_train

)

dataset_val = TensorDataset(input_ids_val,

attention_masks_val,

labels_val

)

len(dataset_train)

1258

len(dataset_val)

223

Setting up BERT Pretrained Model

from transformers import BertForSequenceClassification

model = BertForSequenceClassification.from_pretrained('bert-base-uncased',

num_labels=len(label_dict),

output_attentions=False,

output_hidden_states=False)

Creating Data Loaders

from torch.utils.data import DataLoader, RandomSampler, SequentialSampler

# In Google Colab, on a GPU instance (K80):

# batch_size = 32

# epochs = 10

# A smaller batch size is used here.

batch_size = 4  # 32 on Colab

dataloader_train = DataLoader(

dataset_train,

sampler=RandomSampler(dataset_train),

batch_size=batch_size

)

dataloader_val = DataLoader(

dataset_val,

sampler=SequentialSampler(dataset_val),

batch_size=batch_size

)
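The batch size determines how many steps each epoch takes: the number of batches is the dataset size divided by the batch size, rounded up, because DataLoader keeps the last, smaller batch by default. A quick check with the dataset sizes from above (the helper num_batches is only for illustration):

```python
import math


def num_batches(num_samples, batch_size):
    """Number of batches a DataLoader yields when the last partial batch is kept."""
    return math.ceil(num_samples / batch_size)


print(num_batches(1258, 4))  # 315 training batches per epoch
print(num_batches(223, 4))   # 56 validation batches
```

These values match the totals shown by the progress bars in the training output further down.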

Setting Up Optimizer and Scheduler

from transformers import AdamW, get_linear_schedule_with_warmup

optimizer = AdamW(

model.parameters(),

lr=1e-5, #2e-5 > 5e-5

eps=1e-8

)

epochs = 10

scheduler = get_linear_schedule_with_warmup(

optimizer,

num_warmup_steps=0,

num_training_steps=len(dataloader_train)*epochs

)
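The scheduler raises the learning rate linearly during warmup and then decays it linearly to zero over the remaining steps. The sketch below re-implements that multiplier in plain Python to show the resulting values; it is an approximation of what get_linear_schedule_with_warmup does rather than the library's exact code, and the total of 3150 steps assumes 315 batches per epoch for 10 epochs.

```python
def linear_schedule_multiplier(step, num_warmup_steps, num_training_steps):
    """Factor applied to the base learning rate at a given optimizer step."""
    if step < num_warmup_steps:
        # Linear ramp from 0 to 1 during warmup.
        return step / max(1, num_warmup_steps)
    # Linear decay from 1 to 0 after warmup.
    remaining = num_training_steps - step
    return max(0.0, remaining / max(1, num_training_steps - num_warmup_steps))


base_lr = 1e-5
total_steps = 315 * 10  # batches per epoch * epochs

for step in (0, 1575, 3150):
    lr = base_lr * linear_schedule_multiplier(step, 0, total_steps)
    print(f'step {step:>4}: lr = {lr:.2e}')
```

With num_warmup_steps=0, as used here, training starts at the full learning rate and the rate is halved by the midpoint of training.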

Defining our Performance Metrics

Accuracy metric approach originally used in accuracy function in this tutorial.

import numpy as np

from sklearn.metrics import f1_score

# Example: preds = [0.9, 0.05, 0.05, 0, 0, 0] -> predicted class 0

def f1_score_func(preds, labels):

    # Pick the class with the highest score for each sample

    preds_flat = np.argmax(preds, axis=1).flatten()

    labels_flat = labels.flatten()

    return f1_score(labels_flat, preds_flat, average='weighted')

def accuracy_per_class(preds, labels):

    # Map label ids back to category names for printing

    label_dict_inverse = {v: k for k, v in label_dict.items()}

    preds_flat = np.argmax(preds, axis=1).flatten()

    labels_flat = labels.flatten()

    for label in np.unique(labels_flat):

        # Predictions and true labels restricted to samples of this class

        y_pred = preds_flat[labels_flat == label]

        y_true = labels_flat[labels_flat == label]

        print(f'Class:{label_dict_inverse[label]}')

        print(f'Accuracy:{len(y_pred[y_pred==label])}/{len(y_true)}\n')
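Because the classes are imbalanced, the choice of averaging matters. The toy example below (made-up labels, not the SMILE data) compares the weighted F1 used above with the macro average when a model always predicts the majority class:

```python
import numpy as np
from sklearn.metrics import f1_score

# Nine samples of class 0, one of class 1; the "model" always predicts class 0.
toy_labels = np.array([0] * 9 + [1])
toy_preds = np.zeros(10, dtype=int)

# zero_division=0 scores the never-predicted class as 0 without a warning.
weighted = f1_score(toy_labels, toy_preds, average='weighted', zero_division=0)
macro = f1_score(toy_labels, toy_preds, average='macro', zero_division=0)

print(f'weighted F1: {weighted:.3f}')  # 0.853
print(f'macro F1:    {macro:.3f}')     # 0.474
```

The weighted score looks good even though the minority class is never predicted, so it is worth reading it together with the per-class accuracy.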

Creating our Training Loop

Approach adapted from an older version of HuggingFace’s run_glue.py script. Accessible here.

import random

seed_val = 17

random.seed(seed_val)

np.random.seed(seed_val)

torch.manual_seed(seed_val)

torch.cuda.manual_seed_all(seed_val)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model.to(device)

print(device)

cuda

Assuming valX is a tensor with the complete validation data, the usual approach is to wrap it in a Dataset and DataLoader and get the predictions batch by batch.

Also, to save memory during evaluation and testing, you can wrap the validation and test code in a with torch.no_grad() block.

For the validation set, the code would look like this (valX, valY and criterion are placeholders):

with torch.no_grad():

    model.eval()

    y_pred = model(valX)

    val_loss = criterion(y_pred, valY)

and for the test set:

with torch.no_grad():

    model.eval()

    y_pred = model(testX)

    test_loss = criterion(y_pred, testY)
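To see what torch.no_grad() changes, the following sketch runs a tiny stand-in model (a single linear layer, an assumption for illustration, not BERT) with and without it:

```python
import torch

tiny_model = torch.nn.Linear(4, 2)   # stand-in for a real model
x = torch.randn(3, 4)

tiny_model.eval()                    # switch layers such as dropout to eval mode

out_with_grad = tiny_model(x)
with torch.no_grad():
    out_no_grad = tiny_model(x)

print(out_with_grad.requires_grad)   # True: a computation graph was recorded
print(out_no_grad.requires_grad)     # False: no graph, so less memory is used
```

The outputs are numerically the same in both cases; only the bookkeeping for backpropagation is skipped.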

def evaluate(dataloader_val):

    model.eval()

    loss_val_total = 0

    predictions, true_vals = [], []

    for batch in tqdm(dataloader_val):

        batch = tuple(b.to(device) for b in batch)

        inputs = {'input_ids': batch[0],

                  'attention_mask': batch[1],

                  'labels': batch[2],

                  }

        with torch.no_grad():

            outputs = model(**inputs)

        loss = outputs[0]

        logits = outputs[1]

        loss_val_total += loss.item()

        logits = logits.detach().cpu().numpy()

        label_ids = inputs['labels'].cpu().numpy()

        predictions.append(logits)

        true_vals.append(label_ids)

    loss_val_avg = loss_val_total/len(dataloader_val)

    predictions = np.concatenate(predictions, axis=0)

    true_vals = np.concatenate(true_vals, axis=0)

    return loss_val_avg, predictions, true_vals

import os

# torch.save does not create missing folders, so make sure Models/ exists

os.makedirs('Models', exist_ok=True)

for epoch in tqdm(range(1, epochs+1)):

    model.train()

    loss_train_total = 0

    progress_bar = tqdm(dataloader_train,

                        desc='Epoch {:1d}'.format(epoch),

                        leave=False,

                        disable=False)

    for batch in progress_bar:

        model.zero_grad()

        batch = tuple(b.to(device) for b in batch)

        inputs = {

            'input_ids': batch[0],

            'attention_mask': batch[1],

            'labels': batch[2]

        }

        outputs = model(**inputs)

        loss = outputs[0]

        loss_train_total += loss.item()

        loss.backward()

        # Clip gradients to a maximum norm of 1.0

        torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

        optimizer.step()

        scheduler.step()

        # The loss is already averaged over the samples in the batch

        progress_bar.set_postfix(

            {'training_loss': '{:.3f}'.format(loss.item())})

    tqdm.write(f'\nEpoch {epoch}')

    loss_train_avg = loss_train_total/len(dataloader_train)

    tqdm.write(f'Training loss: {loss_train_avg}')

    val_loss, predictions, true_vals = evaluate(dataloader_val)

    val_f1 = f1_score_func(predictions, true_vals)

    tqdm.write(f'Validation loss: {val_loss}')

    tqdm.write(f'F1 Score (weighted): {val_f1}')

    # Save the fine-tuned weights after every epoch

    torch.save(model.state_dict(), f'Models/BERT_ft_epoch{epoch}.model')

0%| | 0/10 [00:00<?, ?it/s]

Epoch 1: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 1

Training loss: 0.7975574296973055

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.6059155837699238

F1 Score (weighted): 0.762975916339145

Epoch 2: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 2

Training loss: 0.4435813750036889

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.5948948868151221

F1 Score (weighted): 0.8381931883679935

Epoch 3: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 3

Training loss: 0.2983576275445225

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.5538139296175879

F1 Score (weighted): 0.8431542233760785

Epoch 4: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 4

Training loss: 0.18270550284845133

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.5259785884367635

F1 Score (weighted): 0.8662434969638529

Epoch 5: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 5

Training loss: 0.11218177344158499

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.6620622687145702

F1 Score (weighted): 0.8535941751228626

Epoch 6: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 6

Training loss: 0.05948421741458809

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.6958074946513599

F1 Score (weighted): 0.8637082897172584

Epoch 7: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 7

Training loss: 0.04381906234674037

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.7028247105024222

F1 Score (weighted): 0.8623919844487555

Epoch 8: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 8

Training loss: 0.028735312574546753

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.7281462288156035

F1 Score (weighted): 0.8645974679453454

Epoch 9: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 9

Training loss: 0.02450285246531065

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.7339509774070133

F1 Score (weighted): 0.8674038975615305

Epoch 10: 0%| | 0/315 [00:00<?, ?it/s]

Epoch 10

Training loss: 0.021128401538405183

0%| | 0/56 [00:00<?, ?it/s]

Validation loss: 0.7412530884700702

F1 Score (weighted): 0.8648362667790782

When saving a general checkpoint, to be used for either inference or resuming training, you must save more than just the model’s state_dict. It is important to also save the optimizer’s state_dict, as this contains buffers and parameters that are updated as the model trains. Other items that you may want to save are the epoch you left off on, the latest recorded training loss, external torch.nn.Embedding layers, etc. As a result, such a checkpoint is often 2~3 times larger than the model alone.

To save multiple components, organize them in a dictionary and use torch.save() to serialize the dictionary. A common PyTorch convention is to save these checkpoints using the .tar file extension.
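A minimal sketch of such a general checkpoint is shown below. It uses a single linear layer as a stand-in for the BERT model, and the dictionary keys, the epoch number and the loss value are placeholders chosen for illustration rather than values from this tutorial's run.

```python
import os
import tempfile

import torch

net = torch.nn.Linear(4, 2)                        # stand-in for the BERT model
opt = torch.optim.AdamW(net.parameters(), lr=1e-5)

# Collect everything needed to resume training in one dictionary.
checkpoint = {
    'epoch': 3,                                    # placeholder value
    'model_state_dict': net.state_dict(),
    'optimizer_state_dict': opt.state_dict(),
    'loss': 0.298,                                 # placeholder value
}

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'checkpoint.tar')
    torch.save(checkpoint, path)

    # Restore into freshly created objects to resume training or run inference.
    restored_net = torch.nn.Linear(4, 2)
    restored_opt = torch.optim.AdamW(restored_net.parameters(), lr=1e-5)
    loaded = torch.load(path)
    restored_net.load_state_dict(loaded['model_state_dict'])
    restored_opt.load_state_dict(loaded['optimizer_state_dict'])

print(loaded['epoch'], loaded['loss'])
```

The training loop above saves only model.state_dict(), which is enough for the evaluation that follows but not for resuming training with the same optimizer state.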

Loading and Evaluating our Model

model = BertForSequenceClassification.from_pretrained(

"bert-base-uncased",

num_labels=len(label_dict),

output_attentions=False,

output_hidden_states=False)

When we load the bert-base-uncased checkpoint (which was trained with an architecture similar to BertForPreTraining) into a BertForSequenceClassification model, the library prints a warning.

This means that:

The layers that BertForPreTraining has but BertForSequenceClassification does not have are discarded.

The layers that BertForSequenceClassification has but BertForPreTraining does not have are randomly initialized.

This is expected, and tells you that the BertForSequenceClassification model will not perform well before you fine-tune it. Here, these weights are replaced further down by the fine-tuned weights saved during training.

A related warning about the pooler layer means that the pooler is not used to compute the loss during training. If your fine-tuning does not use the pooler layer, there is no need to worry about that warning.

len(label_dict)

6

In PyTorch, the learnable parameters (i.e. weights and biases) of a torch.nn.Module model are contained in the model’s parameters (accessed with model.parameters()). A state_dict is simply a Python dictionary object that maps each layer to its parameter tensor.

# Print model's state_dict

#print("Model's state_dict:")

#for param_tensor in model.state_dict():

# print(param_tensor, "\t", model.state_dict()[param_tensor].size())

# Print optimizer's state_dict

#print("Optimizer's state_dict:")

#for var_name in optimizer.state_dict():

# print(var_name, "\t", optimizer.state_dict()[var_name])
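For a concrete picture, here is what the state_dict of a very small module looks like. A single linear layer is used as a stand-in, since printing all of BERT's tensors would be long:

```python
import torch

layer = torch.nn.Linear(3, 2)

# Each entry maps a parameter name to its tensor.
for name, tensor in layer.state_dict().items():
    print(name, tuple(tensor.shape))
# weight (2, 3)
# bias (2,)
```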

device = torch.device('cuda')

pass

model.to(device)

pass

# Make sure to call input = input.to(device) on any input tensors that you feed to the model

PATH='./Models/BERT_ft_epoch10.model'

model.load_state_dict(torch.load(PATH,

map_location=torch.device('cuda:0')))

<All keys matched successfully>

When loading a model on a GPU that was trained and saved on GPU, simply convert the initialized model to a CUDA optimized model using model.to(torch.device('cuda')). Also, be sure to use the .to(torch.device('cuda')) function on all model inputs to prepare the data for the model. Note that calling my_tensor.to(device) returns a new copy of my_tensor on GPU. It does NOT overwrite my_tensor. Therefore, remember to manually overwrite tensors: my_tensor = my_tensor.to(torch.device('cuda')).

_, predictions, true_vals = evaluate(dataloader_val)

0%| | 0/56 [00:00<?, ?it/s]

accuracy_per_class(predictions, true_vals)

Class:happy

Accuracy:161/171

Class:not-relevant

Accuracy:20/32

Class:angry

Accuracy:8/9

Class:disgust

Accuracy:0/1

Class:sad

Accuracy:2/5

Class:surprise

Accuracy:2/5
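The per-class counts can be combined into a per-class rate and an overall accuracy. The numbers below are copied from the output above:

```python
# (correct, total) per class, copied from the accuracy_per_class output above.
results = {
    'happy': (161, 171),
    'not-relevant': (20, 32),
    'angry': (8, 9),
    'disgust': (0, 1),
    'sad': (2, 5),
    'surprise': (2, 5),
}

for name, (n_correct, n_total) in results.items():
    print(f'{name:<13} {n_correct / n_total:.1%}')

total_correct = sum(n_correct for n_correct, _ in results.values())
total_samples = sum(n_total for _, n_total in results.values())
print(f'overall: {total_correct}/{total_samples} = {total_correct / total_samples:.1%}')
```

The overall figure of about 86.5% is dominated by the happy class; the rare classes are recognized far less reliably, which is consistent with the imbalance seen in the data.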

You can download this notebook here

Congratulations! We were able to build a deep learning sentiment classifier with PyTorch and BERT.
